Why We Should Analyse Searchbot Behaviour:

Searchbots may not find all our content. How can we know for certain?

[Before diving into this post, please note that a working example of our interactive searchbot analysis reports is available here: Example Visualisations and Analysis of Search Engine Requests]

Do Searchbots Find All Our Content?

Searchbots do their best to find our content, but do they actually find it all? If they don't find all of our content, what do they find? Do they find content that matters?

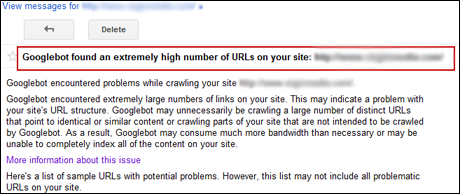

Consider the following message that can appear in Google Webmaster Tools:

One line in particular stands out:

"As a result, Googlebot .... may be unable to completely index all the content on your site"

This message - from Google (click here for further information) - implies that in some circumstances Googlebot may not be able to find all of our content. How can we practically know for sure what Googlebot has or has not found?

How Can We Find Out?

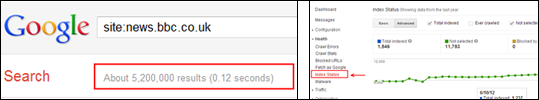

site: query: We could use the 'site:' query (e.g. site:news.bbc.co.uk), but this is impractical for a site serving many thousands of URLs, and it doesn't show what Googlebot has indexed over time.

GWT Index Status: Then there's the Index Status report (under Health) in Google Webmaster Tools, which shows a reasonable quantity of data but currently doesn't show us what Googlebot indexed and when.

... which leaves webserver logfiles.

And Logfiles Can Tell Us What Exactly?

Logfiles record requests, so we can see which URLs Googlebot requested. That isn't exactly the same as seeing which URLs Googlebot indexed, but it does give us a useful indication of what Googlebot has been up to whilst visiting our websites.

More specifically, logfiles can tell us which searchbots have made the most requests, which user-agents a particular searchbot has used and which HTTP responses the searchbots have received, and they can supply a list of all the URLs that a searchbot has requested over a period of time.
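As a minimal sketch of how those figures might be pulled out of a logfile, the Python below assumes the Apache/Nginx "combined" log format and identifies searchbots purely by user-agent substring. The `BOTS` mapping is a hypothetical example, and user-agents can be spoofed, so treat the results as indicative rather than verified.

```python
import re
from collections import Counter, defaultdict

# Assumes the Apache/Nginx "combined" log format; adjust for other formats.
COMBINED = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Hypothetical mapping of searchbot names to lower-case user-agent substrings.
# User-agents can be spoofed, so this identifies *claimed* searchbots only.
BOTS = {"Googlebot": "googlebot", "Bingbot": "bingbot"}


def identify_bot(agent):
    """Return the searchbot name a user-agent claims to be, or None."""
    lowered = agent.lower()
    for name, token in BOTS.items():
        if token in lowered:
            return name
    return None


def summarise(lines):
    """Tally requests, user-agents, HTTP statuses and URLs per searchbot."""
    requests = Counter()
    agents = defaultdict(set)
    statuses = defaultdict(Counter)
    urls = defaultdict(set)
    for line in lines:
        match = COMBINED.match(line)
        if match is None:
            continue  # skip malformed or differently formatted lines
        bot = identify_bot(match["agent"])
        if bot is None:
            continue  # not a searchbot we're tracking
        requests[bot] += 1
        agents[bot].add(match["agent"])
        statuses[bot][match["status"]] += 1
        urls[bot].add(match["url"])
    return requests, agents, statuses, urls
```

Calling `requests.most_common()` on the result then lists the searchbots that made the most requests.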

But if a searchbot has requested 10,000 or 100,000 URLs, how can we make practical sense of such a long list?

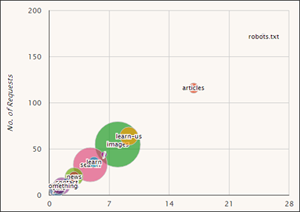

One way is to group those URLs by "top-level section", i.e. the first segment of the URL path. For example:

| URL | Top-Level Section |
|---|---|
| http://www.example.org/article/searchbots-rule/ | "article" |
| http://www.example.org/news/Bingbot-ate-my-hamster/ | "news" |
| http://www.example.org/learn/searchbots-are-your-friend/ | "learn" |
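A sketch of the grouping step, assuming the top-level section is simply the first segment of the URL path. The `"(root)"` label for the home page is our own, hypothetical choice.

```python
from collections import Counter
from urllib.parse import urlsplit


def top_level_section(url):
    """First segment of the URL path, e.g. "news" for /news/some-story/."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return segments[0] if segments else "(root)"  # "(root)" label is hypothetical


def count_by_section(urls):
    """Number of URLs falling into each top-level section."""
    return Counter(top_level_section(u) for u in urls)
```

Because `urlsplit` separates out the query string, `/learn/x/?page=2` and `http://www.example.org/learn/x/` both land in "learn".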

We can then visualise these groupings (click to see a working demonstration):

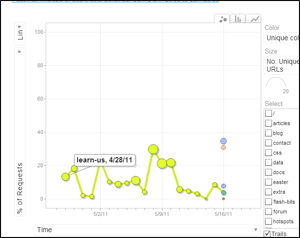

And what's more, we can animate this over time! (click here too to see a working demonstration).
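One way to prepare data for such an animation (our assumption, not necessarily how the demonstration is built) is to bucket each searchbot request by day and top-level section, so that each day becomes one frame. This sketch assumes combined-log timestamps and takes the date in the log's own timezone; `counts_over_time` and `frames` are hypothetical names.

```python
from collections import Counter
from datetime import datetime
from urllib.parse import urlsplit


def top_level_section(url):
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return segments[0] if segments else "(root)"


def counts_over_time(records):
    """Count (day, section) pairs from (timestamp, url) records for one searchbot.

    Timestamps are assumed to look like 10/Apr/2012:13:55:36 +0100; %b expects
    English month abbreviations, and the day is NOT converted to UTC.
    """
    series = Counter()
    for stamp, url in records:
        day = datetime.strptime(stamp, "%d/%b/%Y:%H:%M:%S %z").date()
        series[(day, top_level_section(url))] += 1
    return series


def frames(series):
    """Reshape the counts into one {section: count} frame per day, in date order."""
    by_day = {}
    for (day, section), n in series.items():
        by_day.setdefault(day, {})[section] = n
    return dict(sorted(by_day.items()))
```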

This gives us a high-level view of where Googlebot has been spending its time on our sites, which can serve as a quick sanity check: has Googlebot been spending its time where we expected? We can, of course, dig into the data and carry out the same kind of analysis within a particular section, say the "/news/" section.
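Digging into a single section can reuse the same idea one level down. This sketch assumes sub-sections correspond to the second path segment; `sub_section_counts` and the `"(index)"` label for a section's own landing page are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def sub_section_counts(urls, section):
    """Within one top-level section, count URLs by their second path segment."""
    counts = Counter()
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        if segments and segments[0] == section:
            # "(index)" is a hypothetical label for the section's own landing page
            counts[segments[1] if len(segments) > 1 else "(index)"] += 1
    return counts
```

For example, `sub_section_counts(googlebot_urls, "news")` would break the "/news/" section down into its sub-sections.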

Actionable Findings:

What we really want, though, are actions that will have a genuinely positive impact.

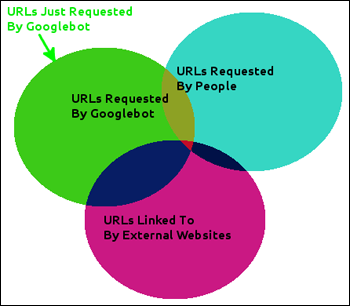

First of all, by combining data from logfiles, Google Analytics and Majestic SEO, we can see whether there are any URLs that only Googlebot (or some other searchbot) requests: URLs that people never request and that no-one links to.

In the diagram above, the area highlighted in green contains such URLs. You might think such URLs would be rare, but they do occur, sometimes in significant numbers. They might be URLs with odd query-string construction, product categories with very obscure filters, or simply sections of the site that no-one visits and no-one links to!
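Finding the URLs in that green area amounts to a set difference. This sketch assumes the three sources have been exported as lists of URLs for a single host, and normalises everything to path plus query string so that relative paths from logfiles and Analytics line up with the absolute URLs in a backlink export. The function names are hypothetical.

```python
from urllib.parse import urlsplit


def normalise(url):
    """Reduce an absolute or relative URL to path + query string."""
    parts = urlsplit(url)
    path = parts.path or "/"
    return path + ("?" + parts.query if parts.query else "")


def searchbot_only(bot_urls, visitor_urls, linked_urls):
    """URLs the searchbot requested that no visitor requested and no-one links to."""
    bot = {normalise(u) for u in bot_urls}
    seen_by_people = {normalise(u) for u in visitor_urls}
    linked = {normalise(u) for u in linked_urls}
    return bot - seen_by_people - linked
```

In practice you may also want to normalise case, trailing slashes or parameter order, depending on how your site treats those variations.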

Supposing you found such a set of URLs, what should you do?

- Analyse the URLs that only Googlebot requests: are there any consistent patterns (e.g. URL construction, sections of the site) that would allow you to tackle them en masse?

- If historically no-one has visited these URLs and no-one links to them, why serve them at all? You could consider simply removing them (and serving "410 Gone"). You could 301-redirect them instead, but you'd have to weigh the possible benefit of preserving some internal reputation against the cost to Googlebot of making two requests (one to the original URL and one to the redirect target) when crawling very obscure content!

- You could, of course, leave the URLs live and restrict access using robots.txt directives, but if you take this approach you should also consider applying Meta Robots tags to all pages that link to these pages, which will get complicated pretty quickly. (Tom Anthony's "10 .htaccess File Snippets You Should Have Handy" might help; see section '3) Robots directives'.)

- In addition to the above, you might need to examine your internal linking structures and the signals they send about what is worth crawling. This too could require some analysis, using tools such as Xenu's Link Sleuth.
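For the first step, spotting consistent patterns, one simple heuristic (our assumption, not the only option) is to group the searchbot-only URLs by top-level section and by the names of their query-string parameters, so that, for example, every product-filter URL using the same combination of filters lands in one bucket. The function names are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit, parse_qsl


def url_pattern(url):
    """(top-level section, sorted query-parameter names) for a URL."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    section = segments[0] if segments else "(root)"
    names = {name for name, _ in parse_qsl(parts.query, keep_blank_values=True)}
    return section, tuple(sorted(names))


def common_patterns(urls, top=10):
    """The most frequent URL patterns, largest group first."""
    return Counter(url_pattern(u) for u in urls).most_common(top)
```

The largest groups are the natural candidates for a single fix, such as one 410 rule or one robots.txt pattern.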

Digging a Little Deeper:

Once we've looked for the obvious targets of URLs that only Googlebot (or whichever searchbot you're interested in) requests, it's time to look a little deeper.

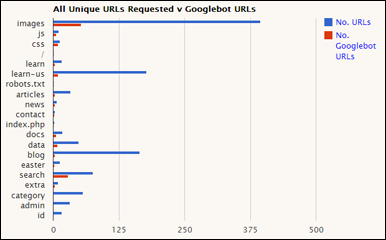

One way of doing this is to compare, top-level section by top-level section, all the URLs requested by anyone and anything with the URLs requested by your favourite searchbot (another live demonstration - click to visit):

This quickly highlights sections where Googlebot may be requesting a particularly small number of URLs compared with those requested by anyone and anything. From there you can drill into the URLs in use in a particular section (or sub-section) of the site and carry out similar analysis to the above: what URL patterns and groupings can you tackle as a group?
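A sketch of that section-by-section comparison, assuming both inputs are lists of URL paths taken from the same logfiles and measuring coverage as the share of each section's distinct URLs that the searchbot requested. `section_coverage` is a hypothetical name.

```python
from collections import Counter
from urllib.parse import urlsplit


def top_level_section(url):
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return segments[0] if segments else "(root)"


def section_coverage(all_urls, bot_urls):
    """Share of each section's distinct URLs that the searchbot requested, lowest first."""
    all_distinct = set(all_urls)
    bot_distinct = set(bot_urls) & all_distinct  # bot requests are a subset of all requests
    totals = Counter(top_level_section(u) for u in all_distinct)
    by_bot = Counter(top_level_section(u) for u in bot_distinct)
    coverage = [(section, by_bot[section] / total) for section, total in totals.items()]
    return sorted(coverage, key=lambda pair: pair[1])
```

Sections at the start of the returned list are the ones where the searchbot requests comparatively few URLs, and so are the first candidates to drill into.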

Build for People:

So far we've discussed removing or blocking access to URLs, but this is something we'd rather not do. Really we want to be positive and provide content that people do actually want to find, share and link to, as opposed to content they're really not interested in.

For example, focus groups may suggest that people would use an obscure filter for a particular type of product, but if in reality no-one uses it and no-one links to it, you're potentially just wasting Googlebot's time (and some of your own resources and bandwidth). So what we want to know is: what exactly do people want?

Which ultimately takes us back to building for people: (a) finding out what people want, and (b) providing it - which we discuss in Understand Relevance, Enhance Content.

How Will All This Help?

If we reduce the number of unnecessary URLs, adjust internal linking and perhaps focus more tightly on exactly what people want, Googlebot (and other searchbots) should spend their time more efficiently. This could mean finding more of the content that really matters or, if Googlebot already finds all of our content, Google serving more up-to-date content (which could be crucial for e-commerce sites).

Further Reading - And Viewing

Back in April 2012, Roland gave a talk on this very topic at Brighton SEO. You can read more about the day-long conference, watch a video of the talk, and read a fully annotated version of it.

And Finally - Any Questions? Get In Touch!

If you have any questions about any of the content in this post, or regarding our Searchbot Analysis & Visualisation tools, please get in touch: email (roland [at] cloudshapes [dot] co [dot] uk), or use the contact form.